#ufos

| January 18th, 2026#philosophersden

| January 12th, 2026#conspiracy-facts

| January 9th, 2026Fri Jan 9 13:13:25 2026

(*4e3d9761*):: https://x.com/DD_Geopolitics/status/2009404076820426941

*** DD Geopolitics (@DD_Geopolitics) on X

*** Argentinians are discovering that the MASSIVE wildfires in Patagonia are being caused by Israelis.

This is a crazy developing story.

*** X (formerly Twitter)

(*4297a328*):: +public! Lord help them repent, because if they don’t they’re going to have a problem.

(*4e3d9761*):: https://x.com/PamphletsY/status/2009499586864533927

*** ★★★★★ Pamphlets ★★★★★ (@PamphletsY) on X

*** 🚨🇦🇷 🇮🇱 BREAKING — Israelis “Tourists” started Argentina’s Wildfires.

*** X (formerly Twitter)

#conspiracy-facts

| January 2nd, 2026Fri Jan 2 14:15:23 2026

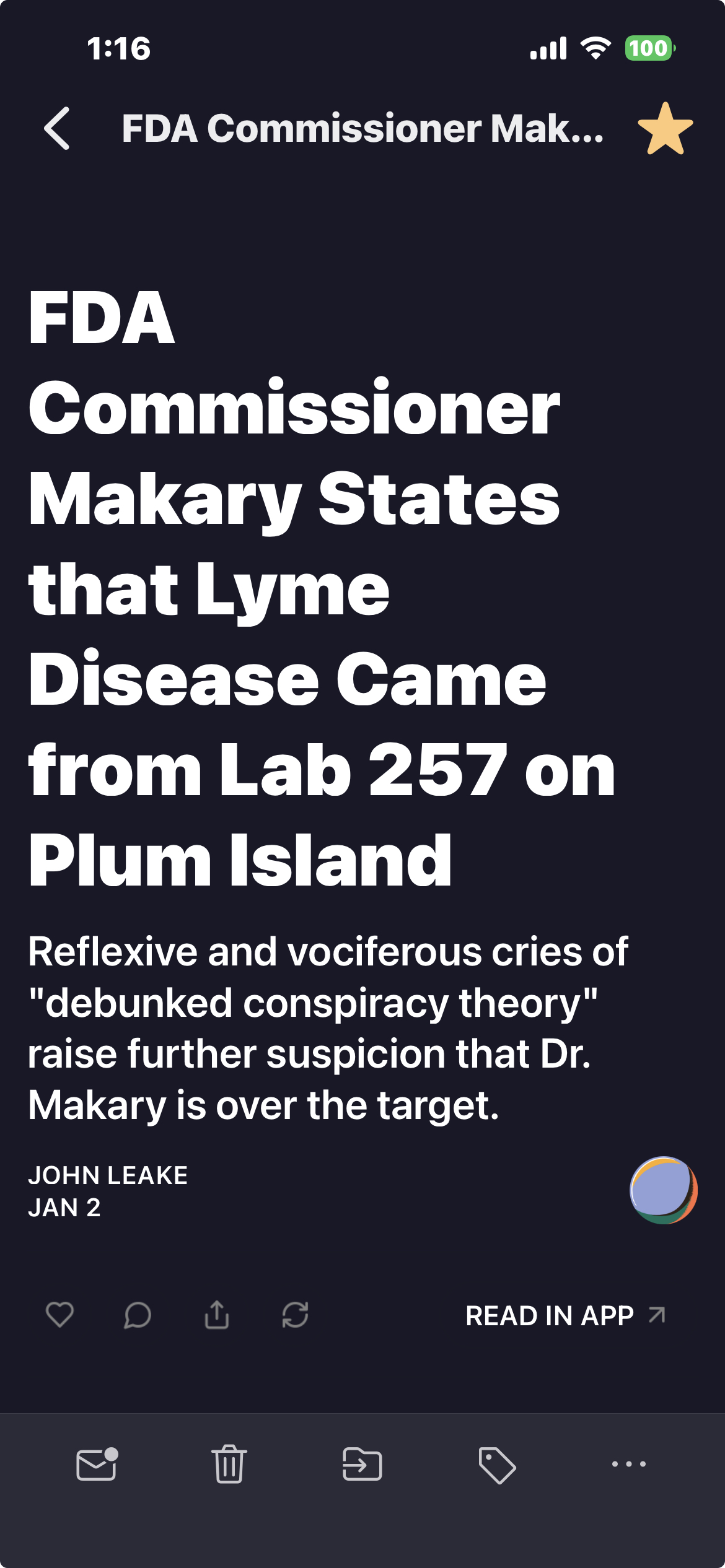

(*b05743e5*):: https://www.thefocalpoints.com/p/fda-commissioner-makary-states-that

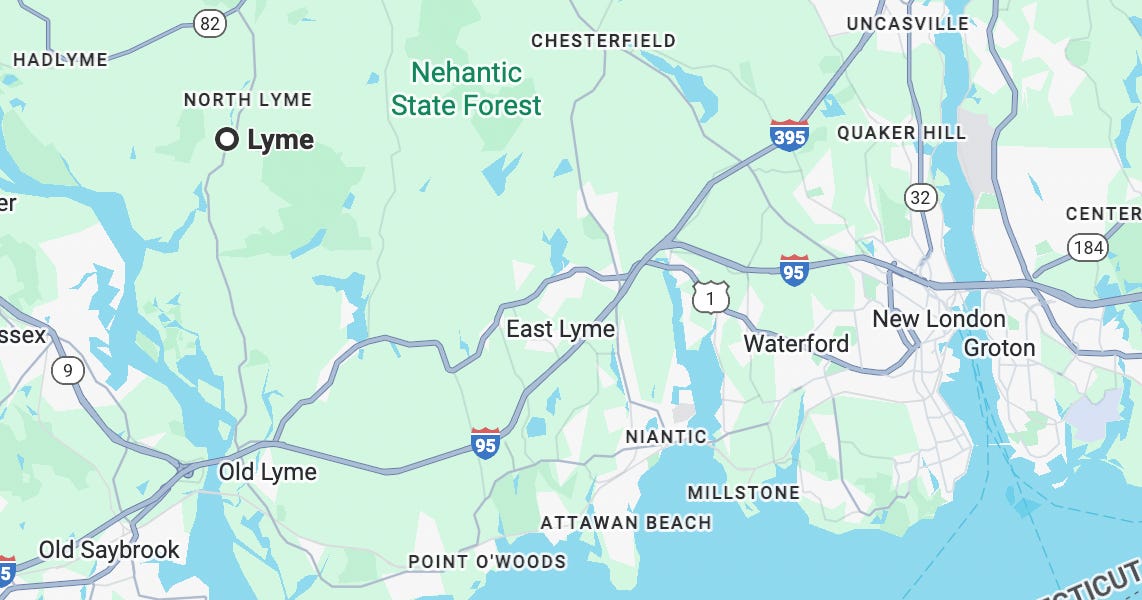

*** FDA Commissioner Makary States that Lyme Disease Came from Lab 257 on Plum Island

*** Reflexive and vociferous cries of “debunked conspiracy theory” raise further suspicion that Dr. Makary is over the target.

*** thefocalpoints.com

(*4297a328*):: +public!

(*b05743e5*):: Reminds me of the pictures of the Montauk Monster washed up on the beach, it looked photoshopped af, but maybe it was real and also from Plum Island…

(*4297a328*):: Sounds like a case for #cryptozookeepers

#conspiracy-facts

| January 2nd, 2026#conspiracy-facts

| January 1st, 2026Thu Jan 1 00:32:16 2026

(*53e37792*):: +public!

#conspiracy-facts

| December 29th, 2025Mon Dec 29 03:31:35 2025

(*4e3d9761*):: https://x.com/NotKennyRogers/status/2005378480775840112

*** NotKennyRogers (@NotKennyRogers) on X

*** The only Democrat in Minnesota to vote against giving state-funded health care to illegal immigrants was shot and killed at her home 166 days ago.

*** X (formerly Twitter)

(*4297a328*):: +public! Wow

#flatcoiners

| December 26th, 2025Fri Dec 26 16:16:18 2025

(*4297a328*):: “Reduce harm” really means “make jokes less funny” +public!

#ufos

| December 23rd, 2025Tue Dec 23 14:34:21 2025

(*4e3d9761*):: https://x.com/UAPJames/status/2003204311699042773

*** UAP James (@UAPJames) on X

*** Palmer Luckey says UAPs likely come from the distant past, understanding them will render current technology ‘irrelevant’

“There’s a lot we don’t understand about these vehicles — What they are, where or when they come from.”

“If and when we figure out what’s going on with

*** X (formerly Twitter)

(*4297a328*):: Palmer – you goof! Pick up your bible! +public!